The future of computer graphics and interactive techniques driving the multibillion-dollar gaming industry will be front and center at Siggraph 2024, taking place from Sunday, 28 July to Thursday, 1 August at the Colorado Convention Center in Denver.

The 51st annual conference embodies the goals of gaming, as both aim to build new worlds with the minds behind real-time graphics, art, and systems that foster human interactions and spectator experiences.

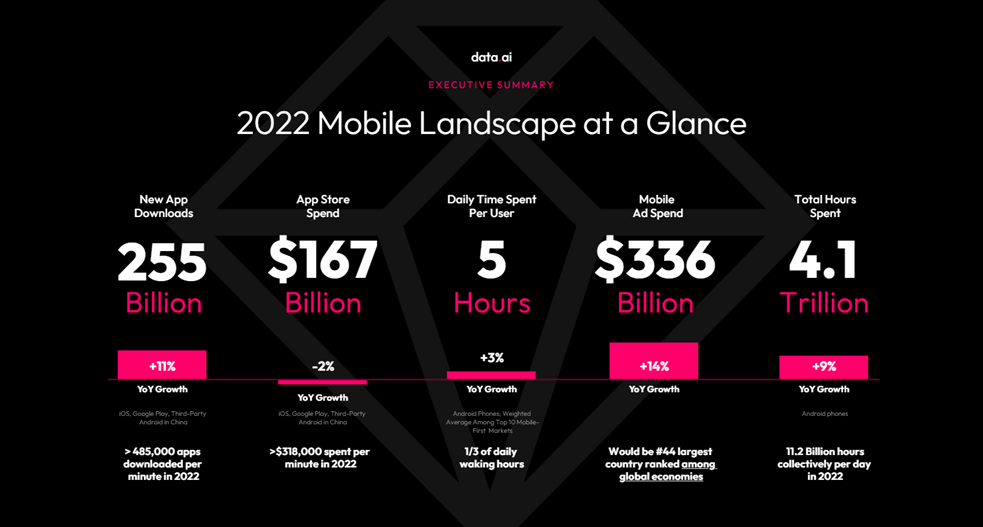

Few industries in the ever-evolving world of technology have captivated the hearts and minds of people worldwide as much as the video game industry, which boasts 3.32 billion gamers. Game creators continue to make strides with the latest advancements, from graphics and visual technologies to related technologies for interactivity, augmented and mixed realities, and generative artificial intelligence (AI). These innovations will be showcased throughout Siggraph 2024 in many programs that weave gaming into the mix, including Talks, Courses, Panels, Appy Hour, the Immersive Pavilion, and Real-Time Live!. Among the Courses, Natalya Tatarchuk’s fan-favourite “Advances in Real-Time Rendering in Games Part 1” and “Part 2” return with demonstrations of state-of-the-art, production-proven techniques for fast, interactive rendering of the complex and engaging virtual worlds of video games.

This year marks the 15th anniversary of Real-Time Live!, which invites conference attendees to experience the connectivity and accessibility of real-time applications across industries in a live showcase. “This year, we branched out and are incorporating a lot of different industries and applications,” said Real-Time Live! chair Emily Hsu. “Our goal is to showcase transformative technology in a variety of forms including game technology, real-time visualisation, artist tools, live performances, or as real-world mixed reality (MR) and augmented reality (AR) applications.”

Hsu continued, “There are no slides and no pre-recorded videos. Whatever you are doing has to be run in real time in front of a live audience in six minutes or less. It crosses the whole spectrum of different industries that are doing real-time work. While gaming applications are definitely represented, there are a variety of showcases from universities, well-known companies, and small start-ups.”

A few Real-Time Live! presentations focused on gaming include:

- “Movin Tracin’: Move Outside the Box” — Movin will unveil an AI-driven, real-time, free-body motion capture technology that uses a single Lidar sensor for accurate 3D movement tracking. The demonstration aims to redefine multiplayer gaming, showcasing dynamic, precise motion capture with a mere 0.1-second latency and automatic calibration, all packaged within Movin’s system.

- “Enhancing Narratives with SayMotion’s text-to-3D animation and LLMs” — Deep Motion will showcase SayMotion, a generative AI text-to-3D animation platform that uses Large Motion Models and physics simulation to transform text descriptions into realistic 3D human motions for gaming, XR, and interactive media. The platform addresses animation creation challenges with AI Inpainting and with prompt optimisation using a Large Language Model fine-tuned to motion data.

- “Revolutionising VFX Production with Real-Time Volumetric Effects” — Zibra AI will present its ZibraVDB compression technology and Zibra Effects real-time simulation tools to demonstrate the capabilities of creating high-end volumetric visual effects in real-time scenarios on standard hardware to enhance game realism and streamline film production workflows.

In the conference’s Spotlight Podcast, Hsu commented, “We are world builders. We make and create stories, and there’s always going to be a natural part that has to do with storytelling, and sometimes that also means transporting into new worlds. What’s happening in the last several years and into the future is that it’s becoming a little easier, more efficient, and more accessible so we can tell different kinds of stories that haven’t been told before, and that people from anywhere can take technology and mold it and use it to build new worlds.”

Immersive technologies and interactive techniques enhance how we interact and help meet practical needs in everyday life, at work and at play. At the conference’s Immersive Pavilion, attendees will experience the evolution of gaming, AR, virtual reality (VR), mixed reality (MR), interactive projection mapping, multi-sensory technologies, and more.

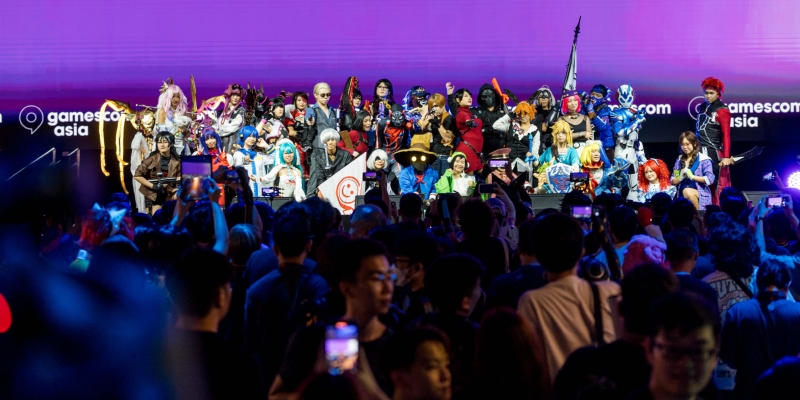

“We always guarantee that everything attendees see, especially anything immersive, has elements they’ve never done and had no experience with before. So, it has that special novelty aspect,” said Siggraph 2024 Immersive Pavilion chair Derek Ham. “I’m very excited about the creators that are thinking about the future of playing from the audience’s perspective to the players’ perspective. When you look at gaming, you now have this platform where people can watch esports or gamers go at it person versus person, or team versus team. We haven’t seen the immersive nature of that for a long time; it’s usually looking at a laptop or screen. The immersive action of the activities and the content, that’s the next level.”

At the pavilion, contributors and participants will engage in critical discourse on the latest interactive breakthroughs and support for hybrid environments. This includes social experiences, games, and artistic expression. The Immersive Pavilion has sought work that reflects the exciting evolution of AR, VR, and MR, particularly in terms of interaction, showing unique features and how they are shaping the future. Eighty submissions came from academic, professional, and independent fronts, and 20 were selected.

A few installations focused on gaming include:

- “MOFA: Multiplayer Onsite Fighting Arena” — Reality Design will present a game framework that uses HoloKit X headsets, exploring the design space of synchronous asymmetric bodily interplay in spontaneous collocated mixed reality. MOFA game prototypes like “The Ghost,” “The Dragon,” and “The Duel,” inspired by fantastic fiction scenarios, demonstrate that the strategic involvement of non-headset-wearers significantly enhances social engagement.

- “Metapunch X: Combining Multidisplay and Exertion Interaction for Watching and Playing E-sports in Multiverse” — The National Taipei University of Technology will present Metapunch X, an encountered-type haptic feedback esports game that integrates exertion interaction in XR with multi-display spectating. The game is designed as an asymmetric competition, using an XR head-mounted display and a substitutional reality robot to create an immersive experience for players and audiences.

- “Reframe: Recording and Editing Character Motion in Virtual Reality” — Autodesk Research will present a live demo of creating lifelike 3D character animations in VR. Attendees will use a novel VR animation authoring interface to record and edit motion. The system uses advanced VR tracking to capture full-body motion, facial expressions, and hand gestures, coupled with an approachable, intuitive editing interface.

Learn more about how the conference is shaping the future, building worlds, and pinpointing the trends of gaming with its games-focused programs by checking out the full program.